- 30 September 2021

- Benjamin Anuworakarn

You may have noticed that in real life, ambient light has a harder time reaching some parts of a surface than others, such as the creases of your clothes or the inside corners where a wall meets the ceiling. This means that the amount of ambient light reaching a point on a surface can be estimated as a function of the surrounding local geometry; this estimation is called ambient occlusion.

Ambient occlusion is a seemingly simple feature of lighting in the physical world, but it is deceptively complex to implement in computer graphics. In this blog post, we’ll cover some of the algorithms used on desktop platforms, one that can be used on mobile, and the optimisations you can apply to improve that algorithm. We even have a code example of this coming out in the 21.2 release of our SDK, which is coming very soon, so be sure to subscribe to the newsletter and check back here regularly to find out when it drops. In the meantime, this blog post goes over some of the research and concepts that were explored during the development of that code example. We hope you’ll find it interesting!

Ambient Occlusion Algorithms

There are four main ambient occlusion algorithms used in 3D graphics today:

- Screen space ambient occlusion
- Horizon-based ambient occlusion
- Voxel-accelerated ambient occlusion
- Ray-traced ambient occlusion

These are presented in order of increasing complexity and resource demand: screen space ambient occlusion (from here on referred to as SSAO) is much simpler and more lightweight than voxel-accelerated ambient occlusion. Specialised hardware is required for a ray-traced approach to be practical, and we hope to bring you a demonstration of this in the future.

For simplicity, we’ll be focusing on SSAO for now. SSAO works by first rendering a Gbuffer pass, which stores the scene’s normals and position data so that the scene geometry can be estimated from them. Then, for each fragment, pseudo-randomly distributed samples are taken in a neighbourhood around that fragment’s view space position; each sample that lies inside the scene geometry counts towards the amount of occlusion for that fragment.

A naïve implementation

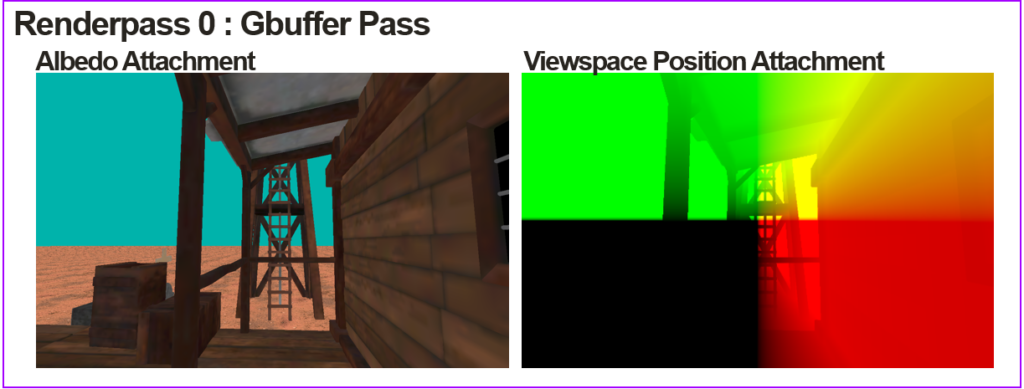

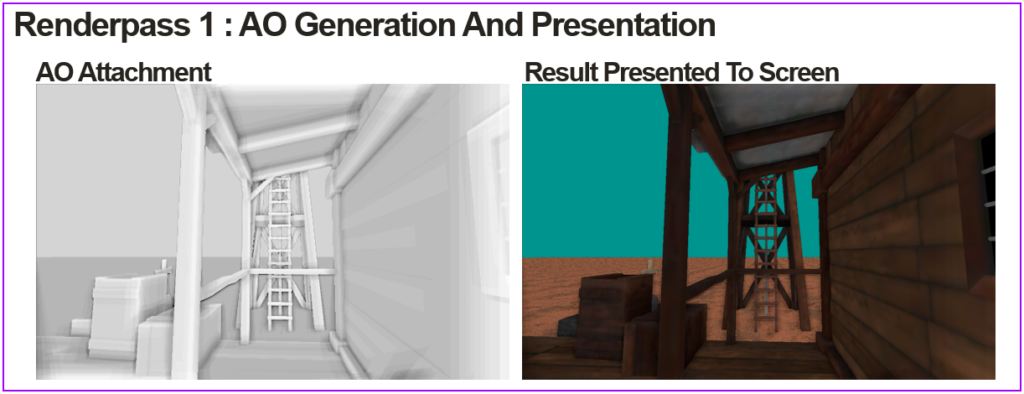

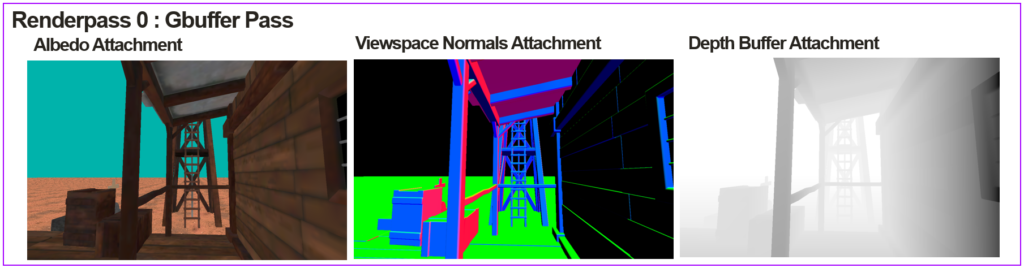

SSAO naturally lends itself to a deferred rendering pipeline; calculating the view space positions, using that data to generate the SSAO, and then compositing the ambient lighting factor with the albedo texture allows us to generate a fully-shaded scene.

Vulkan requires a user to divide their rendering work into subpasses, which are then grouped into render passes. In the simplest case, the user has one render pass with just one subpass. Subpasses allow a user to chain together pieces of rendering work efficiently, as long as they target the same framebuffer object.

Where possible, consecutive pieces of work should be subpasses of the same render pass, as this allows the GPU to pass fragment data to the next subpass as an input attachment using pixel local storage. This drastically lowers the amount of bandwidth and texture processing required. However, not every pass in this technique can be turned into a subpass, because a subpass can only read an input attachment at exactly the same fragment coordinate as the currently executing fragment. The SSAO algorithm needs to access the estimated geometry data in a radius around the current fragment, so multiple render passes are required. The naïve implementation needs at least two render passes, which are described below.

The first render pass generates a Gbuffer containing an albedo attachment and a view space position attachment. Storing full view space positions uses a lot of bandwidth, which calls for an optimisation that we will discuss later. These attachments are then used as inputs to the next render pass.

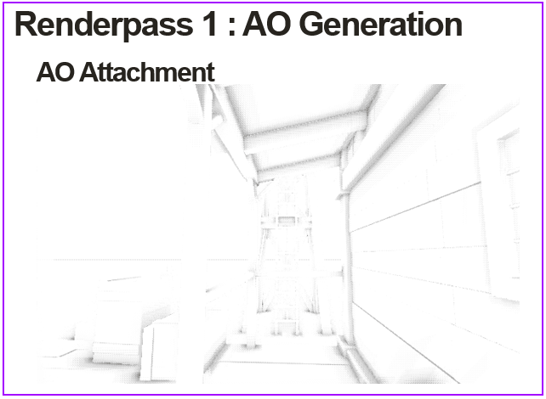

The second render pass contains two subpasses: the first generates the ambient occlusion texture, and the second receives this texture via pixel local storage and composites it with the albedo texture. The final image is then presented to the screen.

Generating the ambient occlusion texture

To start off, we’ll look at a basic and straightforward implementation of ambient occlusion. This will serve as the baseline for an improved version later on.

The first step is to generate a set of sample offsets distributed uniformly at random within a sphere. These samples are uploaded to a uniform buffer object, and the shader compares them against the scene depth. The next step is to draw a screen space effect to a blank render target, generating the ambient occlusion for each fragment. Instead of submitting a screen space quad, the easiest way to do this is to draw a single, oversized triangle that covers the whole attachment. The texture coordinates passed from the triangle are interpolated, so in each invocation of the fragment shader the texture coordinate holds the normalised [0, 1] coordinates of the current fragment.
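As a rough CPU-side illustration of these two setup steps, the Python sketch below generates sphere sample offsets by rejection sampling and lists the vertices of a fullscreen triangle. The function names, the choice of rejection sampling and the exact vertex layout are our own assumptions for illustration, not taken from the SDK code example; in a real renderer the kernel would be uploaded to the uniform buffer and the triangle drawn by a vertex shader.

```python
import random

Vec3 = tuple[float, float, float]


def sphere_kernel(count: int, radius: float = 1.0, seed: int = 0) -> list[Vec3]:
    """Return `count` offsets distributed uniformly inside a sphere of `radius`.

    Rejection sampling: draw points in the enclosing cube, keep only those
    that land inside the unit sphere, then scale them by `radius`.
    """
    rng = random.Random(seed)
    samples: list[Vec3] = []
    while len(samples) < count:
        x, y, z = (rng.uniform(-1.0, 1.0) for _ in range(3))
        if x * x + y * y + z * z <= 1.0:
            samples.append((x * radius, y * radius, z * radius))
    return samples


# One oversized triangle that covers the whole [-1, 1] clip-space square.
# Each entry is (clip-space position, texture coordinate). The rasteriser
# clips away the part outside the screen; inside it, the interpolated
# texture coordinate runs from 0 to 1.
FULLSCREEN_TRIANGLE = [
    ((-1.0, -1.0), (0.0, 0.0)),
    ((3.0, -1.0), (2.0, 0.0)),
    ((-1.0, 3.0), (0.0, 2.0)),
]


def uv_at(ndc_x: float, ndc_y: float) -> tuple[float, float]:
    """Texture coordinate interpolated at a clip-space point of the triangle.

    On this triangle the mapping is uv = (position + 1) / 2, which holds at
    all three vertices and therefore everywhere in between.
    """
    return ((ndc_x + 1.0) / 2.0, (ndc_y + 1.0) / 2.0)
```

The triangle's extra area lies entirely off-screen, so the visible corners (-1, -1) and (1, 1) receive texture coordinates (0, 0) and (1, 1) without a second triangle along the diagonal.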

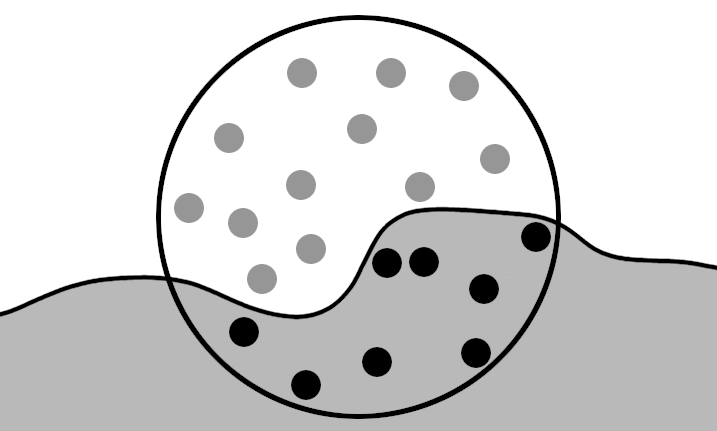

For each fragment in the ambient occlusion texture, we first sample the view space position texture at the same coordinate as the currently executing fragment. This gives us the view space position of the current fragment. Then, for each sample in the uniform buffer, the sample’s offset is added to the fragment’s view space position to generate the sample’s view space position. The projection matrix is used to transform the sample into screen space; reading the view space position texture at the sample’s screen space coordinates gives the view space position of the scene at the same on-screen location as the sample. The z values of these two view space positions represent their depth from the camera. If the sample has a higher depth value than the scene, then the sample is inside the scene’s geometry and contributes to the amount that this fragment is occluded.

In the above figure, the occlusion factor would be 8/20 = 0.4. Because more heavily occluded fragments should be shaded darker, the value written to the texture is actually 1 - 0.4 = 0.6.
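The occlusion test described above can be sketched on the CPU as follows. This is a simplified sketch that assumes a pinhole projection with the camera looking down +z (so z is the depth from the camera); `scene_pos_at` is a hypothetical stand-in for reading the view space position texture, and none of these names come from the SDK.

```python
from typing import Callable

Vec3 = tuple[float, float, float]
UV = tuple[float, float]


def project_to_uv(p: Vec3, focal: float = 1.0, aspect: float = 1.0) -> UV:
    """Pinhole projection of a view-space point to [0, 1] texture coordinates.

    Assumes the camera looks down +z, so z is the depth from the camera.
    """
    x, y, z = p
    ndc_x = (focal / aspect) * x / z
    ndc_y = focal * y / z
    return (ndc_x * 0.5 + 0.5, ndc_y * 0.5 + 0.5)


def naive_ssao(
    frag_pos: Vec3,
    kernel: list[Vec3],
    scene_pos_at: Callable[[UV], Vec3],
) -> float:
    """Return the value written to the AO texture: 1 - occluded / total."""
    occluded = 0
    for ox, oy, oz in kernel:
        # Offset the fragment's view-space position by the kernel sample.
        sample = (frag_pos[0] + ox, frag_pos[1] + oy, frag_pos[2] + oz)
        # Find where the sample lands on screen and read the scene there.
        scene = scene_pos_at(project_to_uv(sample))
        # A sample deeper than the visible surface is inside the geometry.
        if sample[2] > scene[2]:
            occluded += 1
    return 1.0 - occluded / len(kernel)
```

For example, with a flat surface at depth 5 and a kernel that places 8 samples behind the surface and 12 in front of it, the function reproduces the 8/20 case above and returns approximately 0.6.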

Flaws in the naïve implementation

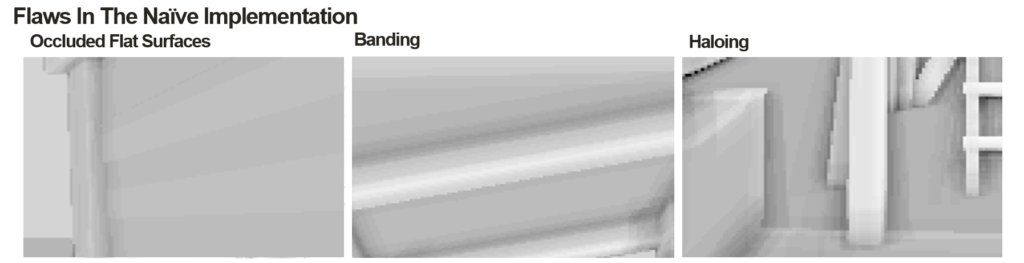

This basic implementation has a few issues and can lead to visual artefacts that may make it unsuitable for use. The first problem is that flat surfaces tend to receive an unnaturally large amount of occlusion, because the sampling sphere places half of the samples behind the surface.

Secondly, points near each other on a surface will likely have similar surrounding geometry. Because occlusion is calculated as a function of that geometry and every fragment uses the same set of samples, neighbouring fragments end up with very similar occlusion values. At scale, this groups the occlusion into distinct steps, forming banding.

Changes in scene depth around edges are also not handled properly. For example, if a plane is placed directly in front of another object in view space, then for fragments of the object behind that lie close to the edge of the plane, every sample that falls behind the plane is considered to be inside the geometry, even though the plane may be far outside the sampling radius. The result is unwanted occlusion around the edges of the plane, an effect called haloing.

Next, we can look at some of the ways to address these issues and make the algorithm more robust.

Technique Improvements

Normal-orientated hemisphere sampling

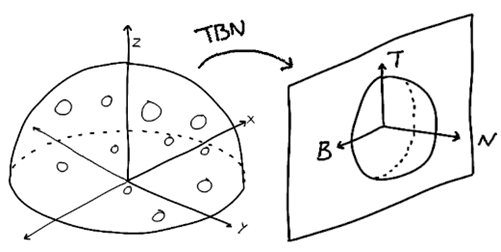

One of the biggest visual flaws with the basic implementation is that even flat surfaces, which shouldn’t be occluded, end up with half of their samples inside the scene geometry. As a result, the overall shading looks darker than it should, which feels unnatural. The solution is to take samples only in a hemisphere, and then orientate each hemisphere so that it is aligned with the view space normal of the surface. This can be done by transforming the samples from tangent space to view space using a TBN (tangent, bitangent, normal) matrix.
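A minimal sketch of hemisphere sampling, assuming a tangent space in which the normal points along +z: offsets are rejection-sampled inside the unit hemisphere and then multiplied by a TBN matrix whose columns are the tangent, bitangent and normal. The function names are hypothetical and not taken from the SDK.

```python
import random

Vec3 = tuple[float, float, float]


def hemisphere_kernel(count: int, seed: int = 0) -> list[Vec3]:
    """Offsets uniformly distributed inside the unit hemisphere z >= 0.

    In tangent space the surface normal is +z, so every offset points away
    from the surface rather than into it.
    """
    rng = random.Random(seed)
    samples: list[Vec3] = []
    while len(samples) < count:
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        z = rng.uniform(0.0, 1.0)
        if x * x + y * y + z * z <= 1.0:
            samples.append((x, y, z))
    return samples


def tangent_to_view(sample: Vec3, t: Vec3, b: Vec3, n: Vec3) -> Vec3:
    """Multiply `sample` by the TBN matrix whose columns are t, b and n."""
    sx, sy, sz = sample
    return (
        sx * t[0] + sy * b[0] + sz * n[0],
        sx * t[1] + sy * b[1] + sz * n[1],
        sx * t[2] + sy * b[2] + sz * n[2],
    )
```

With a camera-facing normal of (0, 0, -1), every transformed offset lies on the normal's side of the surface, so a flat surface no longer starts with half of its samples behind it.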

Randomly rotating samples

Banding is the result of not taking enough samples to differentiate the amount of occlusion of nearby surfaces. Randomly rotating the samples for each fragment makes up for this undersampling. The rotations are produced by creating randomly distributed vectors on the unit circle with a z component of 0. When building the TBN matrix, the tangent is then chosen as the component of the random vector that is perpendicular to the normal, rather than a fixed direction. As a result, the TBN matrix also rotates the samples around the z-axis in tangent space before moving them to view space.
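The tangent construction can be sketched as a Gram-Schmidt step: subtract the random vector's projection onto the normal and normalise what is left. This is our reading of the description above rather than the SDK's shader; the helper names are hypothetical, and the sketch assumes the random vector is never parallel to the normal (the tangent would degenerate in that case).

```python
import math
import random

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def random_rotation(rng: random.Random) -> Vec3:
    """A random unit vector on the unit circle, with z component 0."""
    angle = rng.uniform(0.0, 2.0 * math.pi)
    return (math.cos(angle), math.sin(angle), 0.0)


def tbn_from_random(normal: Vec3, rand: Vec3) -> tuple[Vec3, Vec3, Vec3]:
    """Build an orthonormal (tangent, bitangent, normal) basis.

    The tangent is the part of `rand` perpendicular to the normal, so the
    basis (and every sample it transforms) is rotated about the normal by
    an angle that depends on `rand`.
    """
    n = normalize(normal)
    d = dot(rand, n)
    t = normalize((rand[0] - n[0] * d, rand[1] - n[1] * d, rand[2] - n[2] * d))
    b = cross(n, t)
    return t, b, n
```

Because the tangent differs per random vector, neighbouring fragments sample different directions even when their normals match, which breaks up the banding.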

The fragment shader cannot generate an unlimited supply of random rotations, so instead a 2D uniform buffer containing a fixed-size set of random rotations is generated up front. The rotation for a fragment is chosen by taking the currently executing fragment’s coordinates modulo the buffer’s dimensions, and using the result as an index.
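Selecting a rotation by fragment coordinate can be sketched as below. The buffer layout (a square list of rows) and the function names are assumptions for illustration.

```python
import math
import random

Vec3 = tuple[float, float, float]


def make_rotation_buffer(size: int, seed: int = 0) -> list[list[Vec3]]:
    """A size x size grid of random unit vectors in the xy-plane (z = 0)."""
    rng = random.Random(seed)
    buffer: list[list[Vec3]] = []
    for _ in range(size):
        row: list[Vec3] = []
        for _ in range(size):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            row.append((math.cos(angle), math.sin(angle), 0.0))
        buffer.append(row)
    return buffer


def rotation_for_fragment(buffer: list[list[Vec3]], frag_x: int, frag_y: int) -> Vec3:
    """Index the buffer with the fragment coordinates modulo its size."""
    size = len(buffer)
    return buffer[frag_y % size][frag_x % size]
```

Because the lookup wraps every `size` fragments in each direction, the same rotation repeats across the screen with that period.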

Reusing a repeating set of random vectors produces an interference pattern in the ambient occlusion texture, so a blurring render pass needs to be added. The blur kernel must be the same size as the random rotations buffer: the pattern repeats with the same period as the buffer, so averaging over a window of that size cancels it out. A smaller rotations buffer therefore allows a smaller and cheaper blur, which is why it is preferable to keep the buffer small.
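To illustrate why the blur kernel should match the rotations buffer, the sketch below applies a simple box blur (an assumption; the text does not fix the kernel shape at this stage) whose window covers exactly one period of a repeating pattern. Away from the image borders, every window then contains each pattern value exactly once, so the interference pattern averages out to a constant.

```python
def box_blur(image: list[list[float]], size: int) -> list[list[float]]:
    """Average each texel over a size x size window, clamping reads at edges.

    The window spans offsets -(size // 2) .. -(size // 2) + size - 1, so it
    always covers exactly `size` texels per axis: one full period of a
    pattern that repeats every `size` texels.
    """
    h, w = len(image), len(image[0])
    lo = -(size // 2)
    out: list[list[float]] = []
    for y in range(h):
        row: list[float] = []
        for x in range(w):
            total = 0.0
            for dy in range(lo, lo + size):
                for dx in range(lo, lo + size):
                    sy = min(max(y + dy, 0), h - 1)
                    sx = min(max(x + dx, 0), w - 1)
                    total += image[sy][sx]
            row.append(total / (size * size))
        out.append(row)
    return out
```

Near the borders the clamped reads repeat edge texels, so the cancellation is only exact in the interior.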

Range Check

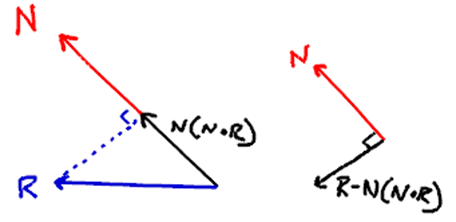

The easiest way to reduce haloing is to stop samples from being compared against scenery outside the sampling radius. A hard cut-off using an if statement looks unnatural, so instead, the best approach is to weight each sample’s contribution to the occlusion by the reciprocal of the depth difference between the fragment we are generating occlusion for and the scene depth that the sample is compared against.

This weight needs to be bounded between 0 and 1, which smoothstep does for us. Given that a sample is detected to be inside the scene geometry, the amount that the sample contributes to the overall occlusion can be calculated as:

occlusion += smoothstep(0.0, 1.0, 0.1 / abs(sceneDepth - fragPos.z))

Where sceneDepth is the depth of the scene for the corresponding sample, and fragPos.z is the depth of the currently executing fragment.
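The range check can be written out as below; `smoothstep` follows the GLSL definition (clamp, then the Hermite polynomial 3t² - 2t³). We assume the constant 0.1 in the formula plays the role of the sampling radius and expose it as a parameter; the zero-distance guard is our addition, because Python raises an error on division by zero.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Same definition as GLSL smoothstep: clamp, then 3t^2 - 2t^3."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def range_checked_contribution(scene_depth: float, frag_z: float,
                               radius: float = 0.1) -> float:
    """Occlusion added by one sample that is already known to be occluded."""
    diff = abs(scene_depth - frag_z)
    if diff == 0.0:
        # radius / diff grows without bound as diff -> 0; smoothstep clamps
        # that to 1, so return the limit directly.
        return 1.0
    return smoothstep(0.0, 1.0, radius / diff)
```

A scene point within the radius of the fragment contributes fully, while one a whole unit away contributes only 0.028, so distant foreground geometry barely darkens the fragment.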

Mobile optimisations

One potential bottleneck for this application is texture processing overload. The application can make too many requests to the texture processing unit (TPU), filling its queue and forcing the renderer to wait until space becomes available. Here, we’ll go over some ways of reducing this problem.

Separable blur

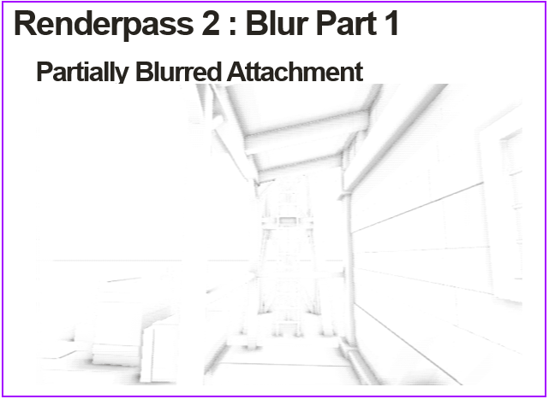

The blurring kernel must be the same size as the random rotations buffer. In the current implementation, a blur kernel n texels wide means that the blur pass makes n² calls to the texture function per fragment. This can be reduced by splitting the blur into two separate render passes: the first pass blurs the ambient occlusion texture horizontally, and the second blurs it vertically. This reduces the number of texture accesses to 2n per fragment.
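The saving can be checked with a small sketch that compares a single-pass n×n box blur with a horizontal pass followed by a vertical pass. A box kernel is assumed here for simplicity; Gaussian kernels are separable in the same way. Both versions clamp reads at the image edges, which keeps their results identical.

```python
Image = list[list[float]]


def clamp(v: int, lo: int, hi: int) -> int:
    return min(max(v, lo), hi)


def box_blur_2d(image: Image, size: int) -> tuple[Image, int]:
    """Single-pass size x size box blur; also returns fetches per texel."""
    h, w = len(image), len(image[0])
    lo = -(size // 2)
    out: Image = []
    for y in range(h):
        row: list[float] = []
        for x in range(w):
            total = 0.0
            for dy in range(lo, lo + size):
                for dx in range(lo, lo + size):
                    total += image[clamp(y + dy, 0, h - 1)][clamp(x + dx, 0, w - 1)]
            row.append(total / (size * size))
        out.append(row)
    return out, size * size


def box_blur_1d(image: Image, size: int, horizontal: bool) -> Image:
    """One axis of a separable box blur (size fetches per texel)."""
    h, w = len(image), len(image[0])
    lo = -(size // 2)
    out: Image = []
    for y in range(h):
        row: list[float] = []
        for x in range(w):
            total = 0.0
            for d in range(lo, lo + size):
                if horizontal:
                    total += image[y][clamp(x + d, 0, w - 1)]
                else:
                    total += image[clamp(y + d, 0, h - 1)][x]
            row.append(total / size)
        out.append(row)
    return out


def box_blur_separable(image: Image, size: int) -> tuple[Image, int]:
    """Horizontal pass then vertical pass: 2 * size fetches per texel."""
    first = box_blur_1d(image, size, horizontal=True)
    return box_blur_1d(first, size, horizontal=False), 2 * size
```

For a 4×4 kernel this is 8 fetches per fragment instead of 16, and the gap widens as the kernel grows.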

Reduced resolution for off-screen render targets

Generating the ambient occlusion texture is by far the heaviest workload in the program, even after optimisation. Rather than reducing the amount of work done per fragment, the number of fragments processed can be reduced by downscaling the render target; rendering at half resolution in each dimension processes a quarter as many fragments. This has minimal effect on the final shading, because the subsequent blur passes smooth out the result anyway, and on mobile devices, where screens are small, the loss of detail is even less noticeable.

It is also possible to combine the separation of blur passes with reducing the resolution. The first blur render pass can be performed at half resolution, whereas the second blur pass can be done at full resolution. By doing the second pass at full resolution, it can act as an upscaling pass, while also allowing the finalised ambient occlusion texture to be passed to the composition render pass via local pixel storage.
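The second, upscaling blur pass can be sketched as below: the output is evaluated at full resolution, but every tap reads the half-resolution texture through a bilinear filter, so blurring and upscaling happen together. The bilinear sampler mimics a GPU sampler with clamp-to-edge addressing; the function names and the tap spacing of one half-resolution texel are assumptions.

```python
import math

Image = list[list[float]]


def bilinear(image: Image, u: float, v: float) -> float:
    """Bilinear sample at normalised (u, v) with clamp-to-edge addressing."""
    h, w = len(image), len(image[0])
    # Texel centres sit at (i + 0.5) / size in normalised coordinates.
    x = u * w - 0.5
    y = v * h - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def texel(ix: int, iy: int) -> float:
        return image[min(max(iy, 0), h - 1)][min(max(ix, 0), w - 1)]

    top = texel(x0, y0) * (1.0 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1.0 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1.0 - fy) + bottom * fy


def vertical_blur_upscale(half_res: Image, out_w: int, out_h: int, size: int) -> Image:
    """Full-resolution vertical blur that reads a half-resolution texture.

    Each output texel averages `size` taps spaced one half-resolution texel
    apart in v, so the blur and the upscale happen in the same pass.
    """
    h = len(half_res)
    lo = -(size // 2)
    out: Image = []
    for y in range(out_h):
        v = (y + 0.5) / out_h
        row: list[float] = []
        for x in range(out_w):
            u = (x + 0.5) / out_w
            taps = [bilinear(half_res, u, v + d / h) for d in range(lo, lo + size)]
            row.append(sum(taps) / size)
        out.append(row)
    return out
```

Because the output is already at full resolution, it can be handed to the composition subpass through pixel local storage instead of being written out and resampled.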

View space texture reconstruction

Reducing the amount of data read per fragment from the input textures lowers the amount of processing per texture fetch in the ambient occlusion pass even further. This can be done by using the depth buffer instead of a view space position texture. Whenever the ambient occlusion pass reads the view space texture, it already knows the screen space coordinates it is reading from. Combining those screen space (x, y) coordinates with the depth from the depth buffer and the inverse projection matrix reconstructs the view space position. This reduces the input texture requirements from three floats per fragment to just one.
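A sketch of the reconstruction, assuming a perspective projection with the camera looking down +z and depth stored in the [0, 1] range: screen coordinates and the stored depth are turned back into normalised device coordinates, multiplied by the inverse projection matrix, and divided by w. The matrix layout and function names are assumptions; a real renderer would invert its own projection matrix and may need to flip y depending on API conventions.

```python
Vec3 = tuple[float, float, float]
Mat4 = list[list[float]]


def perspective(focal: float, aspect: float, near: float, far: float) -> Mat4:
    """Assumed projection: camera looks down +z, depth mapped to [0, 1]."""
    a = far / (far - near)
    b = -near * far / (far - near)
    return [[focal / aspect, 0.0, 0.0, 0.0],
            [0.0, focal, 0.0, 0.0],
            [0.0, 0.0, a, b],
            [0.0, 0.0, 1.0, 0.0]]


def inverse_perspective(focal: float, aspect: float, near: float, far: float) -> Mat4:
    """Closed-form inverse of perspective() above."""
    a = far / (far - near)
    b = -near * far / (far - near)
    return [[aspect / focal, 0.0, 0.0, 0.0],
            [0.0, 1.0 / focal, 0.0, 0.0],
            [0.0, 0.0, 0.0, 1.0],
            [0.0, 0.0, 1.0 / b, -a / b]]


def mat_vec(m: Mat4, v: list[float]) -> list[float]:
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def project(p: Vec3, proj: Mat4) -> Vec3:
    """View space -> (u, v, depth), the depth being what the depth buffer stores."""
    cx, cy, cz, cw = mat_vec(proj, [p[0], p[1], p[2], 1.0])
    return (cx / cw * 0.5 + 0.5, cy / cw * 0.5 + 0.5, cz / cw)


def reconstruct_view_pos(u: float, v: float, depth: float, inv_proj: Mat4) -> Vec3:
    """Rebuild a view-space position from screen (u, v) and one depth value."""
    x, y, z, w = mat_vec(inv_proj, [u * 2.0 - 1.0, v * 2.0 - 1.0, depth, 1.0])
    return (x / w, y / w, z / w)
```

Only the single depth value is read from memory; the rest comes from the fragment's own coordinates and a uniform matrix, which is where the saving from three floats to one comes from.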

The normal texture can also be reconstructed from the depth buffer by taking multiple samples. This can still reduce texture processing, since the samples are close to each other and can be cached. However, on mobile devices there is a trade-off: this uses more fragment processing to reduce texture processing. In the code example, we already rely heavily on fragment processing because the projection matrix is used in the fragment shader. As a result, the normal buffer has been kept for this particular case.
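For completeness, normal reconstruction from depth can be sketched as a cross product of the differences between a fragment's reconstructed view space position and two of its neighbours, which is where the extra samples come from. The neighbour choice and cross product order are assumptions tied to a camera looking down +z, with +u and +v following +x and +y in view space; other conventions flip the sign.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normal_from_positions(center: Vec3, step_u: Vec3, step_v: Vec3) -> Vec3:
    """Estimate a view-space normal from three reconstructed positions.

    `step_u` and `step_v` are the positions one texel along +u and +v from
    `center`. With this cross product order, a surface facing a camera that
    looks down +z gets a normal with negative z.
    """
    n = cross(sub(step_v, center), sub(step_u, center))
    length = math.sqrt(n[0] * n[0] + n[1] * n[1] + n[2] * n[2])
    return (n[0] / length, n[1] / length, n[2] / length)
```

Each of the three positions needs its own depth fetch plus the inverse projection, which is the extra fragment processing the trade-off above refers to.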

Finalised pipeline

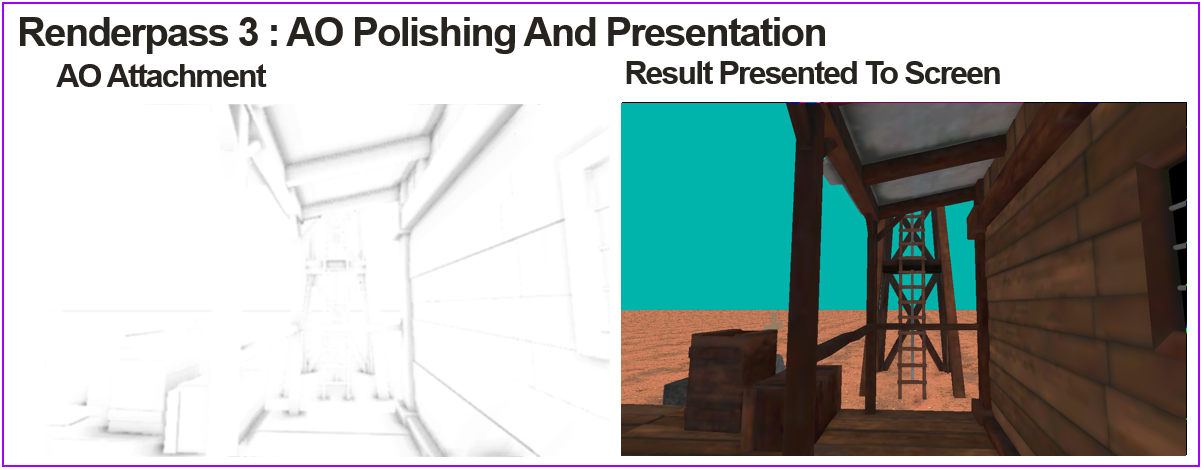

An expanded pipeline that produces a better output can now be described:

First, generate a GBuffer. This has an albedo, normal, and depth attachment. These are used as the inputs to the rest of the pipeline.

Next, using the improved methods, generate an ambient occlusion texture, which is rendered at half resolution. This texture will have noise from the random rotations.

Take the output from the scaled-down ambient occlusion render pass and perform the first half of the Gaussian blur; in this pass the texture is blurred along only one axis. This is still rendered at half resolution.

Finally, the last render pass has two subpasses. The first subpass completes the Gaussian blur on the AO texture by blurring the output of the previous pass along the other axis. During this blur, the image is also upscaled from half resolution to the size of the swap chain image, so that the AO texture can be passed to the final subpass via pixel local storage. The final subpass composites the ambient occlusion with the albedo attachment to produce the final image.
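The finalised pipeline can be summarised as data. The sketch below is a hypothetical description (not the SDK's API) that records each pass's resolution relative to the swap chain and checks the rule that pixel local storage inputs must come from an earlier subpass of the same render pass at the same resolution.

```python
from dataclasses import dataclass, field


@dataclass
class Subpass:
    name: str
    scale: float  # resolution relative to the swap chain image
    sampled: list[str] = field(default_factory=list)     # read as ordinary textures
    pls_inputs: list[str] = field(default_factory=list)  # read via pixel local storage
    outputs: list[str] = field(default_factory=list)


@dataclass
class RenderPass:
    name: str
    subpasses: list[Subpass]


# Hypothetical description of the finalised pipeline.
PIPELINE = [
    RenderPass("gbuffer", [
        Subpass("gbuffer", 1.0, outputs=["albedo", "normal", "depth"])]),
    RenderPass("ambient_occlusion", [
        Subpass("ao", 0.5, sampled=["normal", "depth"], outputs=["ao_noisy"])]),
    RenderPass("blur_first_axis", [
        Subpass("blur_first_axis", 0.5, sampled=["ao_noisy"], outputs=["ao_half_blurred"])]),
    RenderPass("final", [
        Subpass("blur_second_axis_upscale", 1.0, sampled=["ao_half_blurred"], outputs=["ao"]),
        Subpass("composite", 1.0, sampled=["albedo"], pls_inputs=["ao"], outputs=["swapchain"]),
    ]),
]


def pls_handoffs_valid(pipeline: list[RenderPass]) -> bool:
    """Every pixel local storage input must be written by an earlier subpass
    of the same render pass at the same resolution."""
    for render_pass in pipeline:
        produced: dict[str, float] = {}
        for subpass in render_pass.subpasses:
            for name in subpass.pls_inputs:
                if produced.get(name) != subpass.scale:
                    return False
            for name in subpass.outputs:
                produced[name] = subpass.scale
    return True
```

Moving the composite into its own render pass, or leaving the second blur at half resolution, would break this rule, which is why the upscale happens in the first subpass of the final render pass.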

Closing

Ambient occlusion has been explored and studied in depth by many people in the computer graphics field, and we hope that this article has made the concept more accessible to those interested in the challenges of producing realistic-looking effects. We’re very proud of our PowerVR SDK and its code examples, and this is just the first of many case studies into how we build them. As mentioned at the beginning, the Ambient Occlusion code example will be part of the 21.2 Tools and SDK Release, which we’ll be making public soon, so check back for that. Shortly after, we’ll continue publishing research material for our other code examples as well.

If you’re interested in finding out more about various graphics techniques and their implementation, feel free to take a look at our Documentation site, or explore some of the other code examples in our SDK on GitHub.