- 25 September 2017

- Imagination Technologies

My mother is a retired nurse. She’s an intelligent woman, and when it comes to caring for patients, really knows her stuff. The other day I told her I was working on launching Imagination’s new neural network accelerator. “What do you mean?” she asked – having, of course, only ever really thought about neural networks when doing her surgical rotation in nursing school, or perhaps later, when caring for Alzheimer’s patients. So I explained to her that I was talking about artificial intelligence – not the human brain – and I decided right then to write a brief overview of neural networks that my mother (and your non-tech relatives) can understand and link to some additional reading that will expand on the world of AI.

What is a neural network?

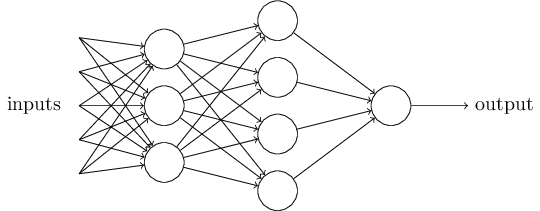

If you look up “what is a neural network?” on Google (it pops up quickly – this is clearly a much-asked question), you will find a lot of scholarly articles that show you a picture like the one above and talk about how neural networks function. There are a lot of technical terms like ‘perceptron’ and ‘back propagation’, and if you’re not predisposed to math or engineering, your eyes may glaze over after the first few paragraphs.

While those of us who aren’t engineers (like me) may never truly understand how these algorithms work (and I am not sure even the techiest among us truly do – check out the black box problem), there is a level of information about neural networks that is useful for all of us to comprehend.

We need this understanding because neural networks are changing life as we know it! I’m not talking about Skynet or VIKI (although I’m not saying these scenarios are out of the question); I am talking about the things we do every day, from technologies that are changing our lifestyles (e.g. smart speakers/assistants, shopping, photo editing, fraud detection) to technologies that are turning entire industries on their heads, such as autonomous vehicles. Then there are the technologies that can dramatically change global societies (e.g. genomic medicine) and many, many things we haven’t yet conceived.

There is a good article by Ophir Tanz (@OphirTanz) and Cambron Carter at TechCrunch that breaks down the “how does a neural network work?” question for us (thanks, guys!) but what’s still lacking in a broad sense is a simple overview of the terminology around neural networks in words we can understand.

Breaking it down

So what exactly is artificial intelligence (AI)? I went straight to the Oxford dictionary for this one: AI is ‘the theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages.’ There are several subsets of AI, including robotics, machine learning, natural language processing and others. The field of AI research officially kicked off during a workshop at Dartmouth College in 1956. I won’t go into the history here, but I would encourage you to read up on it.

Today we have what’s known as ‘Narrow AI’, where many types of networks are each designed to do one specific thing (e.g. image classification) really well. Where the industry is heading is ‘General AI’: essentially, the point at which computers start to act and think like humans. We’re not there yet, but when we get there, computers will be able to do every intellectual task that humans can, including reprogramming themselves (this is when you might want to get worried about Skynet and VIKI). However, many people are working feverishly to ensure those scenarios remain firmly in the realm of sci-fi. A good article on this is by @cademetz: Teaching A.I. Systems to Behave Themselves.

Machine learning is the state-of-the-art in AI today. This term describes how a machine can take in data, parse it, and make some kind of predictions based on it. The machines will learn on their own, without being explicitly programmed. Until relatively recently, we just haven’t had the processing power to make this a reality. Today it’s in use by Google, Amazon and many others.

Deep learning is a type of machine learning. The term comes from the many (deep) hidden layers of processing in the network. For example, convolutional neural networks (CNNs), which are really good at image detection and classification, use tens or hundreds of hidden layers, each of which may detect something more complex than the last – from learning to detect edges on an early layer to eventually learning to detect image-specific shapes on later layers. Deep learning networks will often improve as you increase the amount of data being used to train them.
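For the curious, here is a minimal sketch of what “detecting edges” can look like in practice. It is only an illustration: the tiny image, the `vertical_edge_filter` values and the `apply_filter` helper are all made up for this example, and in a real CNN the filter numbers are learned during training rather than typed in by hand.

```python
# A tiny 4x6 grayscale "image": dark (0) on the left, bright (9) on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# A hand-written 3x3 filter that responds to dark-to-bright vertical edges.
# Assumption for illustration: a real CNN learns these numbers from data.
vertical_edge_filter = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]


def apply_filter(img, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    k = len(kernel)
    out_rows = len(img) - k + 1
    out_cols = len(img[0]) - k + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k):
                for j in range(k):
                    total += img[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


edge_map = apply_filter(image, vertical_edge_filter)
for row in edge_map:
    print(row)
# prints [0, 27, 27, 0] twice: large values where dark meets bright
```

The big numbers in the middle mark the spot where the image switches from dark to bright; the zeros mean “nothing interesting here”. Later layers in a deep network combine many such simple responses into more complex shapes.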

Artificial neural networks (ANNs, or NNs for short), such as CNNs, are basically computer-based networks of processors designed to work in some way like the human brain (or an approximation of it). NNs use successive layers of mathematical processing to make increasing sense, over time, of the information they receive, from images to speech to text and beyond. I won’t put you to sleep by going into detail on all the different types of neural networks; in addition to CNNs, there are BRNNs, DNNs, FFNNs, LSTMs, PNNs, RNNs, TDNNs… – you get the idea. Each of these types of networks works differently to do something specific really well. If you are interested, there is a good overview at the Asimov Institute.

For NNs to actually work, they must be trained by feeding them massive amounts of data. With a convolutional neural network, this might be done for image classification. If you watched the TV show Silicon Valley, the CNN that enabled the creation of the ‘Not Hotdog’ app was shown 150,000 pictures of hot dogs, sausages, etc. in just about every possible state (they actually did it!). @timanglade wrote about the process of training the network: How HBO’s Silicon Valley built “Not Hotdog” with mobile TensorFlow, Keras & React Native. Training a network for high accuracy takes a substantial amount of compute power and is often done on large arrays of GPUs in data centres.
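To make “training” a little less abstract, here is a toy sketch. It is not how the Not Hotdog app was built: instead of a CNN looking at 150,000 photos, it uses a single artificial neuron (a classic perceptron) and four hand-made examples. The two features, `is_long_and_thin` and `is_in_a_bun`, are hypothetical stand-ins for what a real network would work out from raw pixels.

```python
# Toy training data: ([is_long_and_thin, is_in_a_bun], label),
# where label 1 means "hot dog" and 0 means "not hot dog".
# These features are hypothetical; a real network learns from raw pixels.
examples = [
    ([1, 1], 1),  # long, thin and in a bun: hot dog
    ([1, 0], 0),  # long and thin, no bun: e.g. a pencil
    ([0, 1], 0),  # in a bun but not long and thin: e.g. a burger
    ([0, 0], 0),  # neither: e.g. an apple
]

# The "knowledge" of the neuron: it starts out knowing nothing.
weights = [0, 0]
bias = 0


def predict(features):
    """Return 1 (hot dog) if the weighted sum of the features is positive."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# Training loop: show every example, and nudge the weights after each mistake.
for epoch in range(100):
    mistakes = 0
    for features, label in examples:
        error = label - predict(features)  # +1, -1, or 0 when correct
        if error != 0:
            mistakes += 1
            for i, x in enumerate(features):
                weights[i] += error * x
            bias += error
    if mistakes == 0:  # a full pass with no mistakes: training is done
        break

for features, label in examples:
    print(features, "->", predict(features), "(expected", label, ")")
```

Nobody tells the program the rule “long, thin and in a bun”; it arrives at weights that encode it simply by being corrected each time it gets an example wrong. Real training works on the same principle, just with millions of adjustable numbers instead of three.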

A mockup of a ‘Not Hotdog’ app

Once trained, a neural network can parse new data and make predictions in a process called inference. In the case of the ‘Not Hotdog’ app, you can aim your smartphone camera at an object to find out whether or not it’s a hot dog. To determine this, the device uses inference to quickly compare what it sees against its understanding of a hot dog (based on prior training) and provides a probability that the object is a hot dog.
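As a rough sketch of that last step, the snippet below turns a score into a probability. The `WEIGHTS` and `BIAS` values are invented placeholders for whatever training would have produced, and the two yes/no features are hypothetical; a real app would feed camera pixels through a full network. The squashing function used here (the sigmoid) is one common way to map any score onto a value between 0 and 1.

```python
import math

# Hypothetical values standing in for the result of prior training.
WEIGHTS = [2.0, 3.0]  # importance of [is_long_and_thin, is_in_a_bun]
BIAS = -4.0


def hotdog_probability(features):
    """Inference: weigh the features, then squash the score into 0..1."""
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-score))  # sigmoid function


for name, features in [("hot dog", [1, 1]), ("burger", [0, 1])]:
    p = hotdog_probability(features)
    verdict = "Hotdog" if p >= 0.5 else "Not hotdog"
    print(f"{name}: {p:.0%} -> {verdict}")
```

Notice that no learning happens here: inference only applies numbers that were fixed during training, which is why it is so much cheaper than training and can run on a phone.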

Inference (and not just for detecting hot dogs!) is what our new PowerVR Series2NX Neural Network Accelerator (NNA) is really good at. And this capability will go beyond phones – we’ll start seeing the capability in drones, surveillance cameras, cars, and other areas – all of which will provide us new services based on what they can infer from their environment.

Neural networks in use

As I mentioned, different networks are good at different things. I don’t think it’s useful to highlight which network does what (this is covered exhaustively on other sites), but I do think it’s useful to understand what functions they can be trained to carry out. Some of the places where today’s NNs excel are in recognising patterns, classifying and enhancing images, and detecting/classifying faces and objects. NNs are also increasingly being used for language translation, music production, and text/speech recognition. “Alexa, are you using a neural network?” (Yes, she is.)

Amazon, Google, Baidu, Facebook and others are all already using NNs across the platforms that we use every day, from search engines to social media.

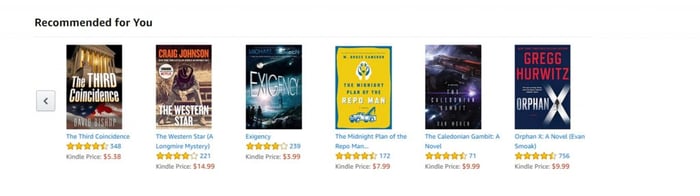

On the practical side, algorithms used by Amazon are making my shopping easier. Unfortunately, there are apparently some competing algorithms that often recommend books to me that aren’t relevant, and they miss many of the recommendations I get on Goodreads. I have to assume this has to do with paid placement; hopefully, Amazon will figure out a way to make this less obvious in the future.

A few of the author’s recent Amazon recommendations based on an NN engine

On the aspirational side, as a redhead with a very fair complexion and a family history of melanoma, I am pleased to know that there are several efforts underway to create a network that can detect skin cancer with high accuracy via a photo.

If you get bored, you can try out some of the many apps that use neural networks. Point your phone at the world around you and see textual captions of the objects in the frame.

Aim your phone at an image with human readable characters, and be amazed as the text is transformed to the human voice. You can even change your face in photographs to look younger or happier (you can also change the faces of others – just don’t use this capability to generate fake news!).

A blog post from Yaron Hadad outlines some of the amazing things enabled by deep learning and has some really cool videos.

Of course, as with any technology, there is a possibility for people to misuse it. Neural networks will process the information they are fed. Whether it’s for cracking passwords, stealing money or identities or hijacking infrastructure systems, we all know that there are people around the world hard at work on these and other vicious schemes.

Looking ahead

Hopefully, my mom now understands the basics of NNs and is as inspired as I am by the myriad ways researchers are using them. As we see increasing volumes of data, and better, more affordable processing technologies, like the PowerVR 2NX neural network accelerator, deep learning technologies will find their way into virtually every area of society. They’ll power your autonomous car, run your smart home and office, make your business more productive, keep you out of the hospital, and provide you with endless hours of fun.

The author’s mom as a young nursing student in the 60s