- 08 August 2013

- Kristof Beets

GPU compute is one of the hottest topics today – but what exactly is it, and why should we all care about it for mobile devices?

Answering the first question is relatively easy, as the name already gives it away: GPU compute means using the GPU not for graphics but for general-purpose computation (usually in the form of number crunching). This usage scenario makes sense, as GPUs have very large numbers of Arithmetic Logic Units (ALUs), and doing shader calculations (for vertex and pixel processing) is, at a basic level, no different from other more generic types of calculations.

GPU compute means highly parallel, independent processing

So does that mean the GPU will replace the CPU, which has historically been the resource for number crunching? Not quite, because another key characteristic of GPU compute comes into play: parallelism. GPUs are number-crunching beasts, but they have been designed for graphics processing, and in this usage scenario data elements are inherently independent. The calculation for one vertex of a 3D object does not affect the calculation for any other vertex being processed. The same is true for pixel processing: the calculations for one pixel on the screen are not directly influenced by the calculations for any other pixel on the same screen.

This independence allows for massively parallel processing, where many vertices and/or pixels can be processed in parallel. GPUs are very much designed with this parallelism in mind, and typically a GPU consists of tens or even hundreds of parallel compute engines which all independently process data. This architectural design means that GPUs are fast and highly efficient at processing generic data elements matching the graphics usage case.
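A minimal sketch of what independence means in practice, assuming a made-up per-pixel operation (`shade_pixel` and `process_in_order` are hypothetical names, not part of any PowerVR API). Because each output depends only on its own input, the pixels can be visited in any order, or all at once, and the result is the same:

```python
import random

def shade_pixel(pixel):
    """Hypothetical per-pixel kernel: brighten one grayscale value, clamped to 255."""
    return min(255, pixel + 40)

def process_in_order(pixels, order):
    """Apply shade_pixel to every pixel, visiting indices in the given order."""
    out = [None] * len(pixels)
    for i in order:
        # Reads only pixels[i] and writes only out[i]: no pixel depends on another.
        out[i] = shade_pixel(pixels[i])
    return out

pixels = [0, 100, 200, 250, 17, 230]
forward = list(range(len(pixels)))
shuffled = forward[:]
random.Random(1).shuffle(shuffled)  # an arbitrary, fixed visiting order

result_forward = process_in_order(pixels, forward)
result_shuffled = process_in_order(pixels, shuffled)
print(result_forward == result_shuffled)  # order does not matter
```

This order-independence is the property that lets a GPU hand each pixel to a different compute engine.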

This is exactly what makes GPU compute different from CPU compute. CPU compute has historically been dependent compute: the CPU acts as a decision engine, and what it is currently processing depends strongly on what it processed previously. This is known as serial processing. GPUs, on the other hand, have many parallel compute units. Using these units for serial compute is typically inefficient, because the architecture's parallelism cannot be exploited and the resulting performance is lower. CPUs have been designed with serial work in mind and thus excel, as far as possible, at executing serial tasks.
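For contrast, here is a toy example of dependent (serial) compute, assuming an arbitrary recurrence chosen purely for illustration (`serial_recurrence` and its constants are hypothetical). Each step needs the previous step's result, so the steps cannot simply be handed to separate parallel units:

```python
def serial_recurrence(inputs, seed=1):
    """Each step consumes the state produced by the previous step."""
    state = seed
    history = []
    for x in inputs:
        # The new state cannot be computed until the old state is known.
        state = (state * 31 + x) % 1000
        history.append(state)
    return history

in_order = serial_recurrence([3, 1, 4])
reordered = serial_recurrence([4, 1, 3])
print(in_order, reordered)  # different results: processing order matters here
```

Unlike the per-element case, changing the processing order changes the answer, which is the kind of workload the text describes as a better fit for a CPU.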

GPU parallelism comes with another characteristic related to the handling of branching. Branching means that, as part of execution, a decision is made to run a certain set of instructions based on a test operation per processed element. This breaks the parallel behaviour as we get divergence between executed tasks. Again, this is simply linked to how GPUs are designed with many parallel engines which match GPU usage scenarios. When you have many parallel ALU engines, you need to control them, and this requires logic which decodes and tracks the instructions that need to be executed.

To allow for efficient designs, GPUs build on the assumption that many ALUs will work on independent parallel data, but that they will all execute the same instruction. This is known as SIMD (Single Instruction Multiple Data). This approach allows GPU designs to include many ALU engines while limiting the control logic overhead (in terms of silicon and power costs). It has a cost when branching occurs, however: execution diverges, and different data elements may end up on different branches of the program execution path. Branching occurs even in graphics processing, as more complex pixel and vertex shading effects execute branches. While branching may reduce efficiency, architectures are still typically designed to handle these branches as cleverly as possible.
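The cost of divergence can be illustrated with a deliberately simplified model. It assumes a group of lanes that shares one instruction stream and masks off lanes that are not on the path currently being executed; real GPUs, including PowerVR designs, handle branches in more sophisticated ways, and the function names and cost figures below are hypothetical:

```python
def simd_group_cost(conditions, then_cost, else_cost):
    """Instruction slots a lockstep group spends on one if/else.

    Simplified model: the group issues the 'then' path if any lane needs it
    and the 'else' path if any lane needs it. Expects a non-empty lane list.
    """
    cost = 0
    if any(conditions):
        cost += then_cost
    if not all(conditions):
        cost += else_cost
    return cost

def utilization(conditions, then_cost, else_cost):
    """Fraction of issued lane-slots that did useful work."""
    lanes = len(conditions)
    useful = sum(then_cost if c else else_cost for c in conditions)
    issued = lanes * simd_group_cost(conditions, then_cost, else_cost)
    return useful / issued

uniform = [True, True, True, True]       # every lane takes the same branch
divergent = [True, False, True, False]   # lanes disagree on the branch

print(simd_group_cost(uniform, 10, 10), utilization(uniform, 10, 10))
print(simd_group_cost(divergent, 10, 10), utilization(divergent, 10, 10))
```

In this model, a group whose lanes all agree pays for one path only, while a group whose lanes disagree pays for both paths and leaves half of its lane-slots masked off.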

In summary, GPUs are very good at ultimate parallelism: lots of independent data elements, all executing the same program with minimal and ideally no divergence due to code branches. CPUs, on the other hand, are designed to handle serial processing tasks where there are constant dependencies, and branching is handled effectively.

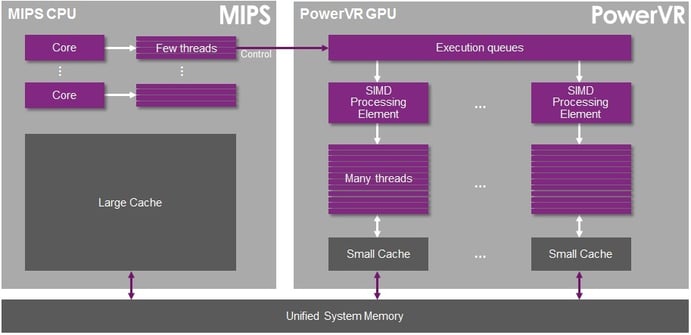

CPUs and GPUs should be considered as complementary designs, optimized for different types of processing. This impacts the hardware architecture where the CPU is optimized for flow control and low memory latency by dedicating transistors to control and data caches. The GPU is optimized for data-parallel computations that have a high ratio of arithmetic operations to memory operations, dedicating transistors to data processing rather than data caches and flow control, as illustrated below:

A typical representation of CPU and GPU architectures


The right task running on the appropriate processor

This level of hardware optimization for specific tasks is what brings us to the “why” part of this section, as hardware architectural optimization brings with it power efficiency – the most critical element in the battery-powered mobile market segment.

Executing massively parallel tasks on a CPU works, but throughput is fairly low because the architecture is designed to handle serial, divergent tasks; hence the power efficiency of running such a task is low. On the other hand, running a highly parallel, non-divergent task on a GPU is extremely fast, as this is what GPUs were designed for. Hence, GPUs deliver enormous throughput and also very high power efficiency.

The reverse is also valid: running highly divergent serial tasks on a GPU results in lower utilization, with many ALUs sitting idle. In this scenario, throughput and power efficiency will be lower. On the other hand, running a serial, divergent task on a CPU is exactly what a CPU was designed for, and hence hardware utilization and throughput will be high and the power efficiency will be better.

This power and performance benefit is demonstrated in the images below. They are both taken from this video showing a highly parallel compute task, image processing, in which each pixel is independent of all other pixels and is processed with the same program and instructions with zero divergence: an optimal scenario for a GPU. Running the algorithm on our PowerVR GPU results in a much higher framerate than a multicore CPU implementation running at a clock frequency many times higher:

Buffer sharing between OpenCL and OpenGL ES (top left) is supported on PowerVR GPUs; it provides better performance and lower CPU overhead

The screenshots above demonstrate both the resulting high performance and the efficiency benefit, which leads to optimized power consumption. The GPU is not only fast, it also uses far less power to achieve that higher performance, which is critical for battery-powered mobile devices.

PowerVR GPU compute, for parallel non-divergent processing, has been proven to deliver higher performance and better power efficiency than using the CPU for these tasks. For other types of processing, the roles may be reversed, which makes the CPU and GPU complementary processing solutions. Recognizing this split is critical going forward. There is no sense in making CPUs more parallel-capable or GPUs more serial-capable. It is far better to ensure that both types of processing resources are available and optimized for their respective usage scenarios, and to assign each workload to the most suitable processor available within an SoC.