- 17 March 2016

- Imagination Technologies

Michael Kissner is an indie game developer. With a past in mathematical physics, he has always been interested in the physical aspects of light. His passion lies with computer graphics and the hope to one day fully simulate all aspects of light in real-time. Computer games have been a natural by-product and for his current game Spellwrath he has created a real-time, ray-tracing engine. Michael is currently serving as an army officer in the German armed forces.

Modern real-time graphics rely heavily on rasterization techniques to achieve high frame rates and a believable scene. The process is usually simple: take a polygon, move it around a little, draw it to some buffer and add fancy shading.

Rasterization has its ups and its downs. It’s fast, no doubt, but suffers severely when we think about multiple polygons influencing each other. What do I mean by this rather esoteric description? Well, the second we draw the polygon, it loses any sort of concept of where it is. When drawing polygons this way, we have to constantly keep track of the order in which we draw them. Sure, we have workarounds like depth buffers, but these won’t save us from the nightmares that transparent polygons have given us. And shadows? An even bigger horror, and one that I wish to discuss here from a completely different point of view.

Ray Tracing

In the realm of offline photo-realistic 3D rendering, an alternative method still reigns supreme. You might have guessed it from the title: ray tracing. Again, the concept is simple. We cast a ray from the eye of the viewer through each pixel of the screen into our scene, and whatever it hits is the thing we render. From that intersection (or hit point) we can cast more rays towards anything else that interests us. Cast one towards a light source and we can find out if our object lies in shadow or is lit. Cast one using the angle of incidence and we get reflection. Every ray produces a different shading effect for our pixel.
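To make the shadow ray idea concrete, here is a minimal sketch in Python. It assumes a toy scene made only of spheres and a single point light; the names `ray_sphere` and `in_shadow` are purely illustrative and not taken from any engine.

```python
import math

def ray_sphere(origin, direction, center, radius):
    """Nearest hit distance along a normalized ray, or None.

    Assumes the ray origin lies outside the sphere.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray misses the sphere entirely
    t = -b - math.sqrt(disc)
    return t if t > 1e-6 else None  # ignore hits behind the origin

def in_shadow(point, light_pos, spheres):
    """Cast a single ray from a hit point towards a point light."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist = math.sqrt(sum(x * x for x in to_light))
    direction = [x / dist for x in to_light]
    for center, radius in spheres:
        t = ray_sphere(point, direction, center, radius)
        if t is not None and t < dist:
            return True  # something sits between the point and the light
    return False
```

A point directly below a sphere that sits under the light comes back as shadowed, while a point off to the side comes back as lit. This is exactly the hard-shadow test: one ray, one yes-or-no answer.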

Ray tracing is pretty much reversing what happens in nature. Instead of following where each photon travels from a light source, we trace it backwards and see where it came from. Unless of course you are adding in photon mapping, but that is a story for a different day.

So, why aren’t we using ray tracing when rendering real-time scenes? The answer is speed. Finding the intersection of a ray and a scene filled with polygons can be painfully slow. And we need to do this for every pixel. Perhaps even more if we want shadows, reflections and refractions. The sad thing is, the result won’t even look that much better than rasterizing the scene with shadow maps and other effects, yet we sacrificed a ton of milliseconds each frame. To improve quality and out-do rasterization, we need even more rays, further decreasing our much beloved frames-per-second. Unless we follow the rasterization example and create our own bag of tricks!

Some tricks for real-time ray tracing

There are exceptions to the rule that ray tracing is less efficient than rasterization. When the 3D data is highly organized, ray tracing suddenly becomes easy and fast. Finding intersections between rays and geometry is a lot easier if you only need to test a small subset of said geometry instead of every piece in the scene. The best examples of this are probably voxel scenes, especially ones that are organized into octrees (or even better, sparse voxel octrees). It turns out that with this kind of data, ray tracing is faster and orders of magnitude more memory efficient than a naive rasterization approach would be.
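To illustrate why organized data helps, here is a rough sketch of a ray walking through a uniform voxel grid, visiting only the cells it actually crosses. Note the assumptions: this uses a plain grid of unit cells stored as a Python set rather than an octree, and the function name `trace_voxels` is hypothetical. It is a simplified stand-in for the idea, not the data structure used in my engine.

```python
import math

def trace_voxels(origin, direction, solid, max_steps=64):
    """Step a ray cell by cell through a grid of unit voxels.

    `solid` is a set of integer (x, y, z) cells that are filled.
    Returns the first filled cell the ray enters, or None.
    """
    cell = [math.floor(c) for c in origin]
    step, t_max, t_delta = [], [], []
    for axis in range(3):
        d = direction[axis]
        if d > 0:
            step.append(1)
            t_max.append((cell[axis] + 1 - origin[axis]) / d)
            t_delta.append(1 / d)
        elif d < 0:
            step.append(-1)
            t_max.append((cell[axis] - origin[axis]) / d)
            t_delta.append(-1 / d)
        else:
            # The ray never crosses a cell boundary on this axis.
            step.append(0)
            t_max.append(math.inf)
            t_delta.append(math.inf)
    for _ in range(max_steps):
        if tuple(cell) in solid:
            return tuple(cell)
        # Advance across whichever cell boundary comes first.
        axis = t_max.index(min(t_max))
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
    return None
```

The cost depends on how many cells the ray crosses, not on how many voxels exist in the scene. An octree takes this further by letting the ray skip large empty regions in a single step.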

At some point, I decided to go down the real-time ray tracing route using voxels. However, I am not a purist and my intention was always to mix in a few techniques that I borrowed from deferred shading methods. Yet even with this “cheating”, the biggest fear that had been lingering in the back of my mind was: how do I get good-looking shadows?

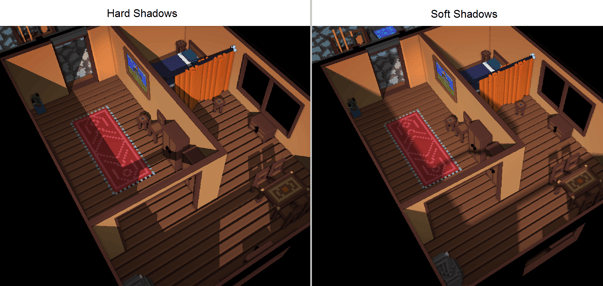

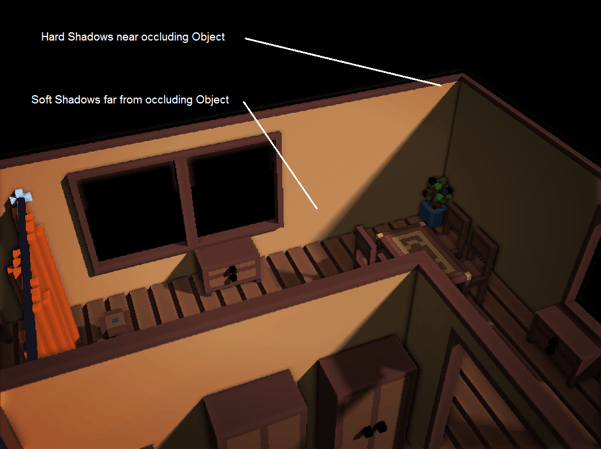

Usually, shadows rendered using a single bounce in ray tracing have a hard edge, aptly named hard shadows (seen above on the left of the image).

This type of shadow is perfectly fine for extremely tiny point lights, but those seldom occur in nature. Often light sources have at least some breadth, thus creating partial shadows / penumbras – or in rendering terms, soft shadows (to the right of the image above).

Creating soft shadows in ray tracing, specifically dynamic soft shadows, usually requires casting multiple rays towards the entire area of a light source. This is obviously not desirable when we think about real-time rendering. Our goal will always be to reduce the number of secondary rays we cast.
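For comparison, this is roughly what the brute-force approach looks like. The sketch assumes a square area light lying in a horizontal plane and a hypothetical `blocked(point, target)` callback that performs one shadow ray test; both are illustrative choices.

```python
def soft_shadow_brute_force(point, light_center, light_size, blocked, n=4):
    """Fraction of an n x n grid of points on a square light visible from `point`.

    `blocked(point, target)` is an assumed callback that returns True when
    the segment from `point` to `target` hits an occluder.
    """
    visible = 0
    for i in range(n):
        for j in range(n):
            # Sample positions are spread evenly across the light's surface.
            sx = light_center[0] + light_size * ((i + 0.5) / n - 0.5)
            sz = light_center[2] + light_size * ((j + 0.5) / n - 0.5)
            if not blocked(point, (sx, light_center[1], sz)):
                visible += 1
    return visible / (n * n)
```

Even this small 4 x 4 grid means 16 shadow rays per pixel per light, and a smooth penumbra typically needs far more samples than that.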

Fast soft shadows

First we must realize that what we are trying to achieve are not physically accurate soft shadows. Rather, we are attempting to create plausible soft shadows that look good enough for a game or other real-time application. Thus, there is no need to cast hundreds of rays to determine how much of an area light actually reaches a certain pixel. Instead, we will try to use a single ray cast to achieve the speed we need for real-time.

As a note, I use a slightly modified version of Imagination’s algorithm to fit my real-time ray tracer. To set things up, we will need a new buffer for our screen to store all the shadow information we get from ray tracing the light sources. Let’s just call it the ray traced shadow buffer.

After we have scored a hit in our ray tracer, the following steps are taken to trace the shadows:

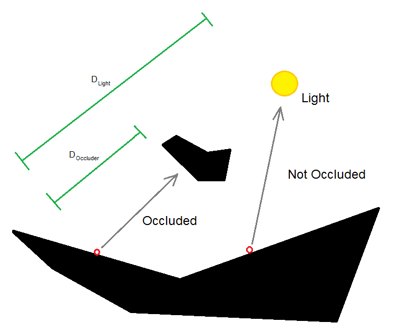

- For each pixel hit on the screen (2D), we cast a ray from its actual 3D position in world space towards the light source

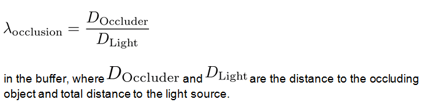

- If the pixel is occluded, we store our occlusion value

- If the pixel is not occluded, we store a value of 1.0 in the buffer

Pretty straightforward, isn’t it? For hard shadows, this would already be sufficient to shade all occluded parts. Simply apply shadow to every pixel with a buffer value smaller than 1.0.
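The buffer fill can be sketched as follows. Two things here are assumptions made for illustration: the occlusion value is taken to be the fraction of the distance to the light at which the shadow ray was blocked (always smaller than 1.0), and `occluder_distance` is a hypothetical callback standing in for the actual ray tracer.

```python
import math

def fill_shadow_buffer(positions, light_pos, occluder_distance):
    """Build the ray traced shadow buffer from per-pixel world positions.

    positions: 2D list of world-space hit points, or None where the
               primary ray hit nothing.
    occluder_distance(point, direction, max_dist): assumed callback that
               returns the distance to the first blocker, or None.
    """
    buffer = []
    for row in positions:
        out_row = []
        for point in row:
            if point is None:
                out_row.append(1.0)  # nothing was hit, so treat as lit
                continue
            to_light = [l - p for l, p in zip(light_pos, point)]
            dist = math.sqrt(sum(x * x for x in to_light))
            direction = [x / dist for x in to_light]
            hit = occluder_distance(point, direction, dist)
            # Assumed occlusion value: how far along the way to the light
            # the ray was blocked, as a fraction in (0, 1).
            out_row.append(1.0 if hit is None else hit / dist)
        buffer.append(out_row)
    return buffer
```

Any value below 1.0 marks the pixel as occluded, which is all a hard shadow needs. Storing a distance-based value rather than a plain flag is what later allows the penumbra size to be estimated.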

As hinted at earlier, there is nothing that stops us from mixing in a few methods commonly found in rasterization techniques into real-time ray tracing. One method that is very useful for what we are doing is deferred shading. Therefore, the actual shading step of each pixel that we have traced will be done post ray tracing:

- Determine if the pixel is occluded (ray traced shadow buffer entry smaller than 1.0). If it is occluded, continue with the next step.

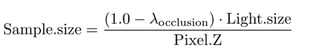

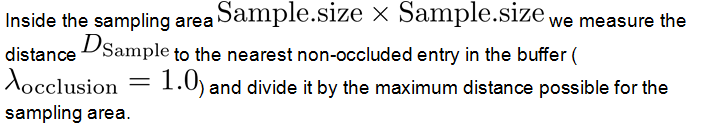

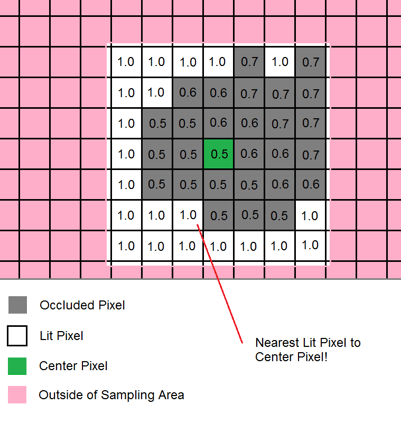

- Determine the sampling size (for our ray traced shadow buffer)

In theory, this sampling size tells us how large the penumbra would be in this area on the screen, if there were one. It therefore also tells us what area we must check to see if there is a penumbra. Obviously we need to factor in some perspective, which is done by dividing by the depth value for that pixel.

Please note I have simplified the perspective transformation here to a simple division by the z-value, ignoring the fact that the penumbra might be larger in screen space due to areas of the shadow being closer to the viewer. It does make sense at this point to artificially increase the sampling size by some constant factor to account for this effect.
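A sampling size along these lines could be computed as in the sketch below. Everything specific here is an assumption: the buffer is taken to hold the fraction of the distance to the light at which the shadow ray was blocked, the penumbra width comes from the usual similar-triangles estimate for an area light, and `focal_px` and `fudge` are hypothetical parameters for the screen scale and the constant enlargement factor.

```python
def sampling_size(occlusion, light_size, depth, focal_px=500.0, fudge=1.5):
    """Estimated penumbra extent in pixels around an occluded pixel.

    occlusion:  assumed buffer value in (0, 1), the blocked fraction of
                the distance to the light; 1.0 means lit.
    light_size: width of the area light in world units.
    depth:      z-value of the pixel in view space.
    """
    if occlusion >= 1.0:
        return 0.0  # lit pixels need no search window
    # Similar triangles: width = light size * (receiver to occluder)
    #                                       / (occluder to light).
    penumbra_world = light_size * occlusion / (1.0 - occlusion)
    # Simplified perspective: divide by depth, then enlarge slightly.
    return fudge * focal_px * penumbra_world / depth
```

With an occluder halfway to a light of width 1.0, a pixel at depth 10 gets a window of 50 pixels before the enlargement factor is applied, and the window doubles if the same pixel is at depth 5.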

For simplicity’s sake, let us assume the viewer is looking at the shadow area head-on, i.e. the z-value is the same for each pixel in the sampling area.

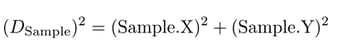

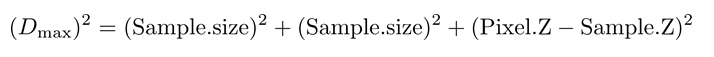

The square of the maximum distance thus reduces to

We are left with a simple grid search, looking for the nearest lit pixel by comparing and finding the pixel with the smallest squared distance

How often we sample the grid is up to us and how much performance we wish to sacrifice at this point. More samples will lead to a smoother penumbra.
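The grid search itself might look like the sketch below. It assumes a square search window centred on the occluded pixel and takes the squared maximum distance to be the squared distance from the centre to a corner of that window; both the function name and that choice of maximum are assumptions for illustration.

```python
def nearest_lit_distance_sq(buffer, px, py, size, samples=5):
    """Search a size x size window around (px, py) for the nearest lit pixel.

    Returns (d2, d2_max): the smallest squared distance found and the
    squared maximum distance. If no lit pixel is found, d2 == d2_max.
    `samples` is the number of samples per axis and must be at least 2.
    """
    half = size / 2.0
    d2_max = 2.0 * half * half  # assumed: centre-to-corner distance squared
    best = d2_max
    height, width = len(buffer), len(buffer[0])
    for j in range(samples):
        for i in range(samples):
            x = int(round(px - half + size * i / (samples - 1)))
            y = int(round(py - half + size * j / (samples - 1)))
            inside = 0 <= x < width and 0 <= y < height
            if inside and buffer[y][x] >= 1.0:  # 1.0 marks a lit pixel
                d2 = float((x - px) ** 2 + (y - py) ** 2)
                best = min(best, d2)
    return best, d2_max
```

The `samples` parameter is the performance knob: the cost per occluded pixel grows with its square, so a coarse grid is cheap but produces visible banding in the penumbra.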

Please note it is possible at this stage to again include the z-value of each pixel to create a more accurate shadow that does not depend on the angle from which the viewer looks at it. For this, we don’t compute the Dmax value beforehand. Instead, we compute it as we do our grid search, since we don’t yet know the z-value of the furthest possible point.

In addition, we need to incorporate the z-value inside our distance to the nearest lit entry.

This is quite some additional computation for each pixel. While it does add some very nice accuracy and most importantly, independence of the viewing angle, it can be neglected to boost speed. At least I do.

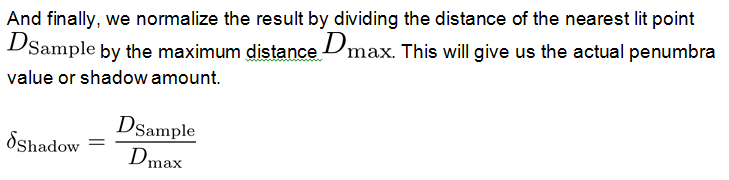

- We now have the distance from the occluded pixel to the nearest lit pixel inside some confined area. It actually tells us the strength of the shadow at that specific point, where the extremes are

or

- We use this value to shade the pixel using standard lighting methods.
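As a rough sketch of this last step, the squared distances can be turned into a shadow strength and folded into a basic diffuse term. The mapping used here (the ratio of the distance to the maximum distance, clamped to 1) and the `ambient` parameter are assumptions chosen for illustration; any monotonic falloff would serve.

```python
import math

def shadow_strength(d2, d2_max):
    """Map squared distance to the nearest lit pixel to a strength in [0, 1]."""
    if d2_max <= 0.0:
        return 1.0  # no search window: fall back to a hard shadow
    return min(1.0, math.sqrt(d2 / d2_max))

def shade(albedo, n_dot_l, strength, ambient=0.1):
    """Diffuse lighting attenuated by the shadow strength.

    albedo:  surface colour as a list of channel values in [0, 1].
    n_dot_l: cosine between the surface normal and the light direction.
    """
    lit = max(0.0, n_dot_l) * (1.0 - strength)
    return [c * (ambient + (1.0 - ambient) * lit) for c in albedo]
```

A lit pixel right next to the occluded one gives a strength near 0 and an almost fully lit result, while a pixel with no lit neighbour inside the window gets the full shadow and keeps only the ambient term.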

With this method, using soft shadows over hard shadows only yields a very minor performance penalty, since we are doing the ray trace for each pixel either way. It’s good to see that the loss in speed is independent of the number of objects or the size of the scene and solely dependent on the screen size and the density of samples we wish to take.

But why aren’t we simply blurring the edge of the shadows to create the wanted softness? Well, we won’t get this cool effect:

Conclusion

With this method I was able to easily implement soft shadows inside my real-time ray tracer with minimal effort. The shadows are fully dynamic and require no pre-computation whatsoever. The only further memory requirement came from the additional framebuffer that is needed to pass the shadow data from the ray tracer to the post processing system.

If we are to slowly transition into a wider adoption of ray tracing in real-time applications, we must begin by at least matching the graphical quality of modern rasterization techniques. I do think we are on the right track in doing so.