- 17 May 2021

- Imagination Technologies

Welcome to the first in a series of articles where we explore how Imagination and Humanising Autonomy, a UK-based behaviour AI company, are teaming up to deliver practical, real-world AI-driven active safety.

Go here to read part 2

We have talked at length about the steps that Imagination takes every day to make our world safer with automotive AI. Today's advanced driver assistance systems (ADAS) have AI at their core. From simple, dynamic satnav route planning, through lane-keep assist, to full autonomous driving stacks, drivers are gradually being equipped with more ways than ever to take the stress out of their journeys.

But until we reach full autonomy, the driver still needs to pay attention; after all, there is no replacement for human intuition. The feeling that a pedestrian who is not looking is about to step out in front of you, or the sense that a cyclist ahead might swerve while taking a drink from their water bottle, is something uniquely human, something unfathomable to an AI algorithm. Or is it?

As humans, we are excellent at recognising human behaviour: we can often anticipate these things before they happen. And despite some of us believing we are "rebels without a cause", the reality is that many of our actions can be predicted by behavioural science. Understanding how our neurons fire and turning that understanding into code that a neural network can emulate, however, are two very different and very difficult things.

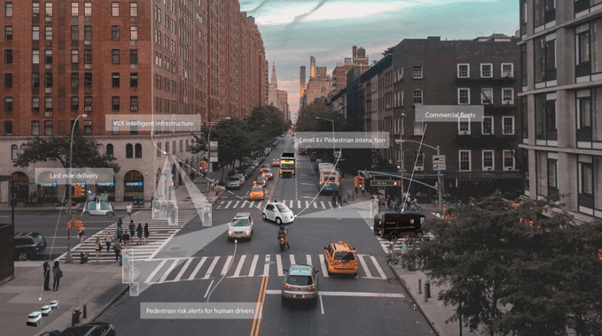

Humanising Autonomy makes software that understands people. Its unique methodology combines behavioural psychology, statistical AI and novel deep-learning algorithms to understand, infer and predict the full spectrum of human behaviours. This approach considers human factors and digital elements together to deliver true automotive safety. Humanising Autonomy has developed an algorithm that can not only identify pedestrians on the street but also predict which of them might be about to present a risk to the driver or to themselves.

Humanising Autonomy's Behaviour AI Platform enables fast analysis of past, present and future human behaviour, making ADAS solutions more accurate than ever before. A 10x reduction in false positives means confident intent prediction, which in turn supports increased productivity and smoother, more efficient journeys. The Behaviour AI Platform builds a complete picture of what is happening outside the vehicle so that drivers can make more informed, safer decisions.

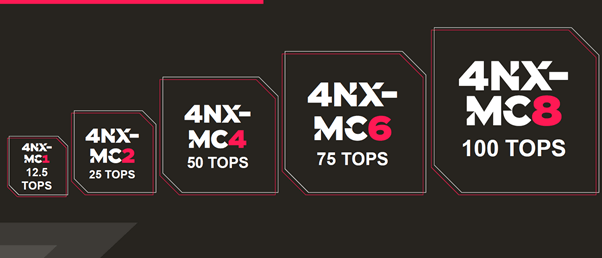

Just as Imagination's range of low-power, scalable, embedded neural network accelerators (NNAs) is setting the standard for performance without compromise within the thermal and power confines of the automobile, Humanising Autonomy is breaking new ground with world-leading, innovative predictive safety algorithms. It's a winning combination.

Humanising Autonomy's algorithm was initially developed using NVIDIA's CUDA platform. Testing and validation of the algorithm were carried out on large, general-purpose graphics processing units (GPUs), and the numbers looked good. We wanted to see what those numbers might look like on an embedded automotive NNA and, following three months of testing and validation, we believe the results speak for themselves.

Using less than 1% of the power draw and 2% of the silicon area of the large GPU, we achieved a similar, viable runtime speed, and this was on an SoC featuring our PowerVR Series2NX NNA, first announced in September 2017. Our current IMG Series4 NNA, now available to license, would offer significantly better runtime performance than this: an order of magnitude beyond what can be expected of a GPU.

Analysing and adapting millions of lines of code to remove the proprietary CUDA libraries and references sounds like a Herculean task. But thanks to Humanising Autonomy's streamlined software development kit (SDK), Imagination's engineers were able to do just that in a very short time. Through careful analysis, and in close cooperation with Humanising Autonomy's team, Imagination's engineers worked through the codebase, removing or replacing the relevant CUDA references. This enabled the algorithm to run on our highly efficient, automotive-specific NNA rather than on a power-hungry desktop GPU.

So, what’s next? The lead time for today’s innovations to make their way into the automotive production chain is measured in years, but when a solution like this could save lives, waiting years is too long.

Thanks to the embedded, low-power, small-form-factor IMG Series4 NNA, an aftermarket unit that can be retrofitted to today's vehicles could be the perfect solution. Many road users already have a professionally installed dashcam to provide a safer driving environment and more accountability on the road, so why should ADAS safety features be any different?

Often on a drive, it's not the destination but the journey that is most interesting. The story of Imagination and Humanising Autonomy's work together runs much deeper than a single blog post can cover. Over the coming weeks, we will be featuring interviews with some of the brilliant minds at Humanising Autonomy, lifting the lid on exactly how we untangled CUDA and delivered such high performance in such a small package, and exploring how the future of ADAS and autonomous driving is drawing ever closer.

